AMD Unveils Instinct MI100 CDNA GPU Accelerator, The World’s Fastest HPC GPU With Highest Double-Precision Horsepower

AMD has officially announced its next-generation Instinct MI100 accelerator, based on the CDNA GPU architecture, which it calls the fastest HPC GPU in the world. The Instinct MI100 is designed to offer the world’s fastest double-precision compute and also deliver huge amounts of GPU performance for AI and deep learning workloads.

AMD Instinct MI100 32 GB HPC Accelerator Official, The World’s Fastest HPC GPU Based on 1st Gen CDNA Architecture

AMD’s Instinct MI100 utilizes the CDNA architecture, which is entirely different from the RDNA architecture that gamers will get access to later this month. CDNA has been designed specifically for the HPC segment and will be pitted against NVIDIA’s Ampere A100 and similar accelerator cards.

Some of the key highlights of the AMD Instinct MI100 GPU accelerator include:

- All-New AMD CDNA Architecture – Engineered to power AMD GPUs for the exascale era and at the heart of the MI100 accelerator, the AMD CDNA architecture offers exceptional performance and power efficiency.

- Leading FP64 and FP32 Performance for HPC Workloads – Delivers industry-leading 11.5 TFLOPS peak FP64 performance and 23.1 TFLOPS peak FP32 performance, enabling scientists and researchers across the globe to accelerate discoveries in industries including life sciences, energy, finance, academics, government, defense, and more.

- All-New Matrix Core Technology for HPC and AI – Supercharged performance for a full range of single and mixed-precision matrix operations, such as FP32, FP16, bFloat16, Int8, and Int4, engineered to boost the convergence of HPC and AI.

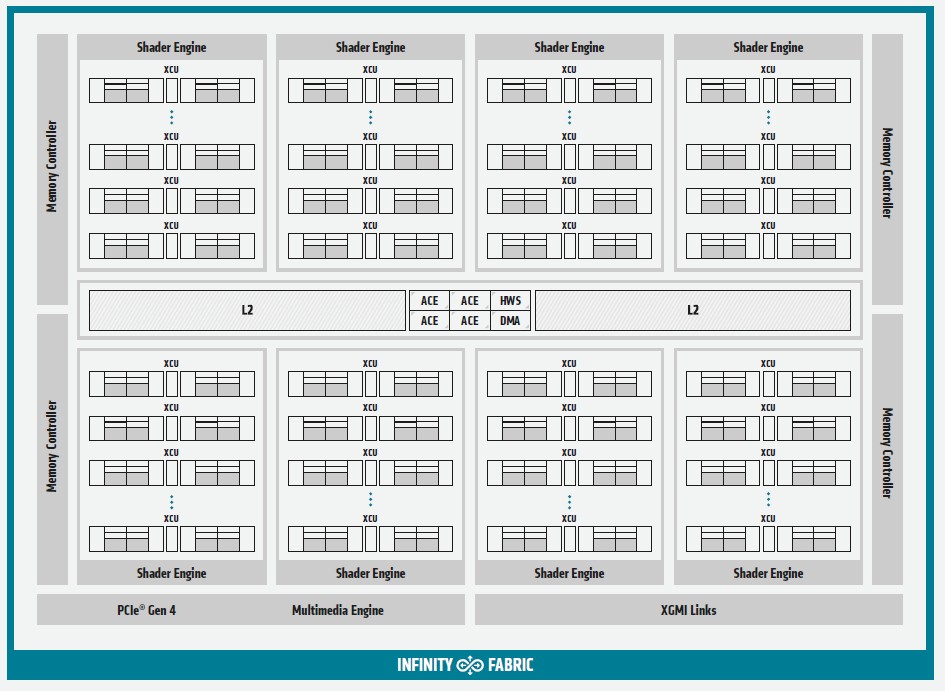

- 2nd Gen AMD Infinity Fabric Technology – Instinct MI100 provides ~2x the peer-to-peer (P2P) peak I/O bandwidth over PCIe 4.0 with up to 340 GB/s of aggregate bandwidth per card with three AMD Infinity Fabric Links. In a server, MI100 GPUs can be configured with up to two fully-connected quad GPU hives, each providing up to 552 GB/s of P2P I/O bandwidth for fast data sharing.

- Ultra-Fast HBM2 Memory – Features 32GB of high-bandwidth HBM2 memory at a clock rate of 1.2 GHz and delivers an ultra-high 1.23 TB/s of memory bandwidth to support large data sets and help eliminate bottlenecks in moving data in and out of memory.

- Support for Industry’s Latest PCIe Gen 4.0 – Designed with the latest PCIe Gen 4.0 technology support providing up to 64GB/s peak theoretical transport data bandwidth from CPU to GPU.
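As a sanity check on the 64 GB/s figure, here is a small Python sketch of PCIe 4.0 x16 bandwidth. It assumes the standard PCIe 4.0 signalling rate of 16 GT/s per lane and 128b/130b line encoding. Under those assumptions, 64 GB/s corresponds to the raw rate in both directions combined (32 GB/s each way), with roughly 31.5 GB/s per direction left after encoding overhead.

```python
def pcie_bandwidth_gbs(gt_per_s: float, lanes: int, encoded: bool = True) -> float:
    """Per-direction PCIe bandwidth in GB/s.

    gt_per_s: transfer rate per lane (PCIe 4.0 = 16 GT/s).
    encoded:  if True, subtract 128b/130b line-encoding overhead.
    """
    bits_per_s = gt_per_s * 1e9 * lanes
    if encoded:
        bits_per_s *= 128 / 130
    return bits_per_s / 8 / 1e9

raw_one_way = pcie_bandwidth_gbs(16, 16, encoded=False)  # 32.0 GB/s
eff_one_way = pcie_bandwidth_gbs(16, 16)                 # ~31.5 GB/s

print(f"raw, both directions:     {2 * raw_one_way:.1f} GB/s")
print(f"effective, per direction: {eff_one_way:.1f} GB/s")
```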

AMD Instinct MI100 ‘CDNA GPU’ Specifications – 7680 Cores & 32 GB HBM2 VRAM

The AMD Instinct MI100 features the 7nm CDNA GPU, which packs a total of 120 Compute Units or 7680 stream processors. Internally referred to as Arcturus, the CDNA GPU powering the Instinct MI100 is said to measure around 720 mm². The GPU is clocked at around 1500 MHz and delivers peak throughput of 11.5 TFLOPs in FP64, 23.1 TFLOPs in FP32, and a massive 185 TFLOPs in FP16 matrix workloads via its new Matrix Cores. The accelerator is rated for a 300 W TDP.
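The headline FP32 and FP64 figures follow directly from the shader count and clock. The Python sketch below assumes 64 stream processors per Compute Unit, one fused multiply-add (2 FLOPs) per stream processor per clock in FP32, and FP64 at half the FP32 rate (consistent with the 11.5 vs 23.1 TFLOPs quoted above). It uses the approximate 1500 MHz clock, so results land slightly below AMD's rounded numbers. The 185 TFLOPs FP16 figure comes from the Matrix Cores and is not modeled here.

```python
def peak_tflops(stream_processors: int, clock_mhz: float, flops_per_clock: int = 2) -> float:
    """Peak throughput in TFLOPs: SPs x clock x FLOPs per SP per clock.

    flops_per_clock=2 assumes one fused multiply-add (FMA) per SP per cycle.
    """
    return stream_processors * clock_mhz * 1e6 * flops_per_clock / 1e12

compute_units = 120
sps = compute_units * 64        # assumed 64 stream processors per CU -> 7680
fp32 = peak_tflops(sps, 1500)   # ~23.0 TFLOPs
fp64 = fp32 / 2                 # assumed half-rate FP64 -> ~11.5 TFLOPs

print(f"{sps} SPs: FP32 {fp32:.1f} TFLOPs, FP64 {fp64:.1f} TFLOPs")
```

The same function reproduces the FP32 row of the table below for older cards, e.g. `peak_tflops(4096, 1800)` gives about 14.7 TFLOPs for the MI60.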

For memory, AMD is equipping its Instinct MI100 HPC accelerator with a total of 32 GB of HBM2. The cards can be deployed in 4- and 8-GPU configurations and communicate through the new Infinity Fabric X16 interconnect, which has a rated bandwidth of 276 GB/s. AMD’s HBM2 delivers an effective bandwidth of 1.23 TB/s, while NVIDIA’s A100 features HBM2e memory with 1.536 TB/s of bandwidth.
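The 276 GB/s Infinity Fabric figure and the 340 GB/s aggregate in AMD's highlights can be reconciled with simple arithmetic. The sketch below is one reading consistent with the numbers, not a breakdown AMD has confirmed: it assumes the 276 GB/s is split evenly across the three Infinity Fabric links (92 GB/s each) and that the 340 GB/s aggregate adds AMD's 64 GB/s PCIe 4.0 figure on top. It also computes the A100's memory bandwidth lead from the figures in the paragraph above.

```python
# Assumption: 276 GB/s is shared evenly by the three Infinity Fabric links.
IF_LINKS = 3
IF_TOTAL_GBS = 276
PCIE4_GBS = 64                              # AMD's quoted PCIe 4.0 figure

per_link = IF_TOTAL_GBS / IF_LINKS          # 92.0 GB/s per link
aggregate = IF_TOTAL_GBS + PCIE4_GBS        # 340 GB/s, matching AMD's claim

# HBM comparison from the same paragraph (TB/s).
mi100_hbm, a100_hbm = 1.23, 1.536
a100_advantage = a100_hbm / mi100_hbm - 1   # ~0.249, i.e. ~25%

print(f"{per_link:.0f} GB/s per link, {aggregate} GB/s aggregate")
print(f"A100 memory bandwidth advantage: {a100_advantage:.0%}")
```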

AMD Radeon Instinct Accelerators 2020

| Accelerator Name | AMD Radeon Instinct MI6 | AMD Radeon Instinct MI8 | AMD Radeon Instinct MI25 | AMD Radeon Instinct MI50 | AMD Radeon Instinct MI60 | AMD Radeon Instinct MI100 |
|---|---|---|---|---|---|---|
| GPU Architecture | Polaris 10 | Fiji XT | Vega 10 | Vega 20 | Vega 20 | Arcturus |
| GPU Process Node | 14nm FinFET | 28nm | 14nm FinFET | 7nm FinFET | 7nm FinFET | 7nm FinFET |
| GPU Cores | 2304 | 4096 | 4096 | 3840 | 4096 | 7680 |
| GPU Clock Speed | 1237 MHz | 1000 MHz | 1500 MHz | 1725 MHz | 1800 MHz | ~1500 MHz |
| FP16 Compute | 5.7 TFLOPs | 8.2 TFLOPs | 24.6 TFLOPs | 26.5 TFLOPs | 29.5 TFLOPs | 185 TFLOPs |
| FP32 Compute | 5.7 TFLOPs | 8.2 TFLOPs | 12.3 TFLOPs | 13.3 TFLOPs | 14.7 TFLOPs | 23.1 TFLOPs |
| FP64 Compute | 384 GFLOPs | 512 GFLOPs | 768 GFLOPs | 6.6 TFLOPs | 7.4 TFLOPs | 11.5 TFLOPs |
| VRAM | 16 GB GDDR5 | 4 GB HBM1 | 16 GB HBM2 | 16 GB HBM2 | 32 GB HBM2 | 32 GB HBM2 |
| Memory Clock | 1750 MHz | 500 MHz | 945 MHz | 1000 MHz | 1000 MHz | 1200 MHz |
| Memory Bus | 256-bit bus | 4096-bit bus | 2048-bit bus | 4096-bit bus | 4096-bit bus | 4096-bit bus |
| Memory Bandwidth | 224 GB/s | 512 GB/s | 484 GB/s | 1 TB/s | 1 TB/s | 1.23 TB/s |
| Form Factor | Single Slot, Full Length | Dual Slot, Half Length | Dual Slot, Full Length | Dual Slot, Full Length | Dual Slot, Full Length | Dual Slot, Full Length |
| Cooling | Passive Cooling | Passive Cooling | Passive Cooling | Passive Cooling | Passive Cooling | Passive Cooling |
| TDP | 150W | 175W | 300W | 300W | 300W | 300W |
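The Memory Bandwidth row can be derived from the Memory Clock and Memory Bus rows. The Python sketch below assumes HBM transfers data twice per quoted clock and GDDR5 four times per quoted clock (which is how 1750 MHz maps to 7 Gbps per pin on the MI6). These transfers-per-clock multipliers are an assumption about how the table's clocks are quoted, not something stated in the table itself.

```python
# (memory clock MHz, bus width bits, transfers per clock) from the table above.
# Transfers-per-clock values are assumptions: 2 for HBM, 4 for GDDR5.
CARDS = {
    "MI6":   (1750, 256, 4),    # GDDR5
    "MI8":   (500, 4096, 2),    # HBM1
    "MI25":  (945, 2048, 2),    # HBM2
    "MI60":  (1000, 4096, 2),   # HBM2
    "MI100": (1200, 4096, 2),   # HBM2
}

def bandwidth_gbs(clock_mhz: float, bus_bits: int, transfers_per_clock: int) -> float:
    """Peak memory bandwidth in GB/s = clock x transfers/clock x bus width / 8."""
    return clock_mhz * 1e6 * transfers_per_clock * bus_bits / 8 / 1e9

for name, (clock, bus, tpc) in CARDS.items():
    print(f"{name:6} {bandwidth_gbs(clock, bus, tpc):7.1f} GB/s")
```

This yields 224, 512, 483.8, 1024 and 1228.8 GB/s, matching the table's rounded figures.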

AMD’s Instinct MI100 ‘CDNA GPU’ Performance Numbers, An FP32 & FP64 Compute Powerhouse

In terms of performance, the AMD Instinct MI100 was compared to the NVIDIA Volta V100 and the NVIDIA Ampere A100 GPU accelerators. Comparing peak numbers against the A100 (without sparsity), the Instinct MI100 offers an 18.6% uplift in FP64 and an 18.5% uplift in FP32 performance. In FP16 performance, the NVIDIA A100 has a 69% advantage over the Instinct MI100.

However, NVIDIA’s Tensor Core and sparsity figures are still higher than what AMD can crunch: 19.5 TFLOPs (FP64 Tensor Core) vs 11.5 TFLOPs in FP64, 156 TFLOPs (TF32) vs 23.1 TFLOPs in FP32, and 624 TFLOPs (FP16 with sparsity) vs 185 TFLOPs in FP16.
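The uplift percentages are simple ratios of peak throughput. The sketch below reproduces them. The A100 values used (9.7 TFLOPs FP64, 19.5 TFLOPs FP32, 312 TFLOPs FP16 Tensor Core, all without sparsity) are NVIDIA's published A100 peak specs, supplied here as an assumption rather than taken from AMD's slides.

```python
MI100 = {"FP64": 11.5, "FP32": 23.1, "FP16": 185.0}
A100 = {"FP64": 9.7, "FP32": 19.5, "FP16": 312.0}   # dense, no sparsity

def uplift(a: float, b: float) -> float:
    """Relative advantage of a over b, e.g. 0.185 means 18.5% faster."""
    return a / b - 1

for fmt in ("FP64", "FP32"):
    print(f"MI100 over A100 in {fmt}: {uplift(MI100[fmt], A100[fmt]):+.1%}")
print(f"A100 over MI100 in FP16: {uplift(A100['FP16'], MI100['FP16']):+.1%}")
```

The FP16 ratio comes out to about 68.6%, which rounds to the 69% quoted above.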

AMD Instinct MI100 vs NVIDIA’s Ampere A100 HPC Accelerator

In terms of value, the AMD Instinct MI100 offers a 2.1x perf/$ ratio in FP64 and FP32 workloads. Once again, these numbers are compared against the A100’s non-sparsity performance. AMD also provides performance statistics in various HPC workloads such as NAMD, CHOLLA, and GESTS, where the MI100 is up to 3x, 1.4x, and 2.6x faster, respectively, than the Volta-based V100 GPU accelerator. Compared to the MI60, the Instinct MI100 offers a 40% improvement in the PIConGPU workload.

According to AMD, its Instinct MI100 GPU accelerator will be available through OEMs and ODMs and integrated into the first systems by the end of 2020. These systems will pair AMD’s EPYC CPUs with Instinct accelerators. Partners including HPE, Dell, Supermicro, and Gigabyte already have servers based on the new Instinct MI100 accelerator ready to ship. There was no word regarding the price of the accelerator.